We breathe for as long as we live, and the sound of our breathing carries a great deal of information about our health, particularly our respiratory condition. Even before COVID-19, researchers had been using breathing sounds to detect illnesses and anomalies in the human respiratory system, which is especially useful for the elderly, infants, and anyone with a compromised ability to convey discomfort. However, traditional anomaly detection systems sample the audio and run detection algorithms on those samples, which can lead to inaccuracy and inefficiency. If the audio is first segmented into breathing cycles, it can instead be fed into the anomaly detection algorithm in an event-driven manner, so that the algorithm focuses on the breathing sounds themselves.

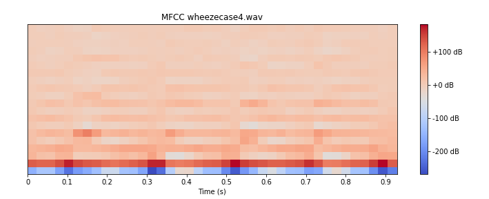

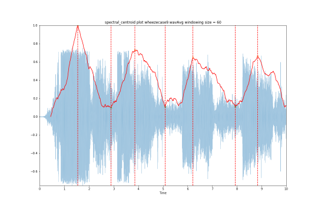

This study was carried out under the supervision of Professor Arindam Basu and explored audio feature extraction techniques that can be used to better detect breathing cycles. While energy- and frequency-based features such as Mel-frequency cepstral coefficients (MFCCs) and chromagrams can be used for audio processing, this study found that simpler features such as root-mean-square (RMS) energy and spectral centroid can be sufficient to detect breathing cycles (inhalation and exhalation) simply, quickly, and effectively. The project received the CY1400 research award, which is given to CN Yang Scholars who did excellent work in their CY1400 research module.
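To make the two features concrete, here is a minimal NumPy sketch of frame-wise root-mean-square and spectral centroid, followed by a simple threshold on the RMS envelope to mark candidate breath segments. It is an illustration only and not the study's actual pipeline: the function names, the frame and hop sizes, the synthetic test signal, and the thresholding rule are all assumptions made for this example.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Split a 1-D signal into overlapping frames, one frame per row."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]

def rms(frames):
    """Root-mean-square amplitude of each frame."""
    return np.sqrt(np.mean(frames ** 2, axis=1))

def spectral_centroid(frames, sr):
    """Magnitude-weighted mean frequency (Hz) of each frame."""
    window = np.hanning(frames.shape[1])
    mag = np.abs(np.fft.rfft(frames * window, axis=1))
    freqs = np.fft.rfftfreq(frames.shape[1], d=1.0 / sr)
    # Guard against division by zero on silent frames.
    return (mag @ freqs) / np.maximum(mag.sum(axis=1), 1e-12)

def active_segments(envelope, threshold):
    """Return (start, end) frame indices where envelope > threshold (end exclusive)."""
    active = envelope > threshold
    padded = np.concatenate(([False], active, [False]))
    edges = np.flatnonzero(padded[1:] != padded[:-1])
    return list(zip(edges[0::2].tolist(), edges[1::2].tolist()))

# Synthetic stand-in for a recording: two noise bursts over a quiet background.
sr = 4000                                  # assumed sample rate in Hz
rng = np.random.default_rng(0)
t = np.arange(4 * sr) / sr
gate = ((t >= 0.5) & (t < 1.5)) | ((t >= 2.5) & (t < 3.5))
audio = rng.normal(size=t.size) * np.where(gate, 1.0, 0.01)

frames = frame_signal(audio, frame_len=400, hop=200)   # 100 ms frames, 50 ms hop
envelope = rms(frames)
centroid = spectral_centroid(frames, sr)

# Hypothetical rule: a frame is "active" if its RMS exceeds 20% of the peak RMS.
segments = active_segments(envelope, threshold=0.2 * envelope.max())
for start, end in segments:
    print(f"segment: frames {start}-{end}, "
          f"mean centroid {centroid[start:end].mean():.0f} Hz")
```

Both features cost only a few array operations per frame, which is why they are attractive as a fast front end. Separating inhalation from exhalation on real recordings would need further logic that this sketch does not attempt.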